Delphix data virtualization can provide the solution to numerous use cases for the provision and consumption of data and when you begin to work with the product you can find yourself coming up with all kinds of weird and wonderful ways to utilize its functionality often replacing existing costly solutions and doing so quickly.

Here’s a use case you may not have thought of, or at least you didn’t when you virtualized and provisioned your first VDB or vFile.

Warning: this post is a little more wordy than usual!

For the purpose of this post, Logical Data Corruption = corruption of application data by a user or application either accidentally or maliciously. Examples are

- updating rows with incorrect data

- updating the wrong rows

- unintentionally deleting rows

- accidentally dropping/truncating tables

- dropping columns from the wrong table

More often than not this kind of corruption happens during or after an application or database change. Rarely does it happen during normal operation because the application is designed to protect against such events and adequate security measures are in place to ensure someone can not maliciously cause a corruption. However, the possibility always exists and the means to recover from these situations must be in place.

The Scenario

The scenario is based on a real life event that I was involved in not so long ago. The event was something that many production support DBAs would have been involved in at some point during their career and usually at daft o’clock in the morning via the on-call phone – the call sounding something like “… we’ve lost data, HELP!”

In my case the call didn’t come through at 2am on a Sunday but rather 10am Monday morning and that’s because the production support DBAs had already been contacted over the weekend and had been working on the situation. The details of this particular issue were that a database change had been implemented over the weekend and, for reasons not known at the time, three relatively small tables had been dropped when they shouldn’t have been. A perfect example of logical corruption.

This was an Oracle database and I’m not sure whether restore points had been used or not but I was told there were none available and rolling back the database was not an option at this point. The environment included one Data Guard physical standby database used as a DR system and there was no lag between the primary and standby database, therefore no use to us here.

The normal procedure from here would be to restore a backup of the database to the point in time of just before the table drop, run an export of the lost tables and then import back into production. The restore would have to be to some other server – we can’t restore a whole database back onto production.

For small databases it can be a fairly quick and easy process but for very large databases this is often not the case. Very large databases often only have maybe one or two days worth of backups on disk with the rest being on “tape” and finding another server to restore to that has the storage capacity to hold the restored database can be a challenge.

Although this was a tier zero service the urgency was not as high as it could have been due to the fact it was a batch processing environment and the next batch was not due to run until the Monday night. If this would have been a tier zero real-time OLTP environment then the call probably would have come through to me at 2am on Sunday!

The Design

As I said at the start, the possibility of logical corruption always exists and the means to recover from such a situation must be in place.

Scheduled application and database changes always have a detailed backout plan in place and standard practice is to have at least one of various ways to recover from an accident in that plan. Certain scenarios of unintentional corruption under normal operation are also covered by standard database features and backup and recovery strategies.

As with all systems, the importance of the system defines how far you go with these recovery strategies, mainly because cost and complexity goes up with every recovery option implemented. Tier zero systems have the highest requirements for data loss (RPO’s of zero and RTO’s of near zero are not uncommon) and therefore have the largest budgets to meet these requirements but the business would be much happier spending cash on adding business value rather than keeping things going.

A design I’ve seen for the recovery from logical corruption with Oracle databases is to have a Data Guard physical standby in place with a lag. This means the standby database does not recover redo in real time but lags behind the primary database by a specified amount of time. The amount of lag depends on the requirements of the system but something like 12 or 24 hours is often the number dreamed up.

The Implementation

So what does this give you and how do you use it to recover?

The idea behind the design is simple. When a logical corruption occurs, someone will notice it (you hope) within the timeframe of the lag and kick the DBA into action stations. The DBA will work on the standby database and recover it to the moment before the logical corruption of the primary database occurred. The DBA will then extract the data (Data Pump export of rows/tables) from the standby and load the data back into the primary database. The DBA will then put his/her feet up after huge thanks from the service owner and a promise of a promotion. Ok, this last bit may not happen!

Disadvantages

What’s the downside? For Oracle we’re using Data Guard and, although a great feature, for this use case is a huge waste of storage. Remember it is a PHYSICAL copy of the primary database so we need to match the storage allocation on the standby server with the primary. If we have a very large database, which tier zeros often are, then that’s a monthly spend for the service owner they would rather do without. Also, once the recovery has taken place you’ll most likely need to rebuild the standby database and that takes time and, although not so commonplace these days, synchronization issues can render the standby useless and right at the time you need it.

And finally, you are relying on the logical corruption being detected within the timeframe of the lag. You could just make the lag longer but it is a trade off between the window for detection of corruption and the amount of time it can take to recover the standby.

Alternative Solution

Back to my real world scenario – Delphix to the rescue. The database in question was around 10TB in size and just so happened to be using Delphix to provision its Operational Acceptance Testing environment. The dSource was using a nightly snapsync and logsync (archivelogs only) and keeping both the snapsyncs and logs for 3 days. The logical corruption occurred during a planned change on the previous Friday night but it wasn’t noticed until sometime on the Sunday and I got the call on the Monday morning.

It’s worth saying now that me getting the call was not a shot in the dark by the project team to see if Delphix could help. I had already been in discussions with the project team about using Delphix in a design for this very scenario. They were early in the process of implementing Data Guard but I had stepped in when I had the bright idea of using Delphix instead.

So the call I received asked that although we hadn’t yet implemented Delphix for the use case of recovery from logical corruption, do we have the capability to do it now. After a quick check of the dsource my answer was a resounding YES!

Recovery From Logical Corruption Using Delphix

So how do we replace Data Guard with Delphix for this use case?

This is the easy bit. As with a physical [standby] database we need a server to host a VDB but this is a simple case of running up a virtual machine on the ESXi host/cluster we already have in place hosting the Delphix engine. This environment is a hardened production environment with production like access controls in place. Why? Because we are hosting production data here (when we provision non-production VDBs they have been through a masking process so the hosts for these are generally in non-production environments – here we will provision a VDB with unmasked production data). Obviously care has to be taken to ensure it is sized correctly so it is worth knowing what kind of recovery we may need to do. We know for Oracle we will use Data Pump to extract the data so maybe 4 vCPUs and just a few GB of memory would give us the export performance we need for even very large tables. A bit of testing here is a good idea.

We then add the virtual server to the Delphix engine hosting the dSource and we now have an environment where we can spin up a VDB quickly.

Save The Day

It’s now Monday morning @ 10:15am and I’ve informed the application team that I can help. Before I committed to this I had already been informed that the drop of the tables took place just after 10pm on the Friday and so checked the dSource to ensure I had the required snapsync and logs still available, which I did, just (there was a three day retention applied to this dSource).

Next I needed a target server to use. This application had two servers already used by Delphix as targets, which were hosting the project teams OAT environment. The VDBs hosted on these were utilizing all the available memory on each server so the only way we could start a new VDB would be to pick a server and shutdown the currently running VDB. Not a problem – whenever there’s a production problem all non-production activities take a very distant second place.

One more thing worth mentioning here. The production database had huge redologs of 15GB, configured as three groups of three members. The slowest part of the VDB provision process for this database was the creation of the redologs. Delphix has to create 3 * 3 * 15GB of files when provisioning the VDB so rather than seeing a standard provision time of say 5 minutes, these VDBs could take well over half an hour. We’re in a time critical situation so we want to save every minute we can. For this case we don’t need the production like redolog sizes or even close to it. We will only extract data from this VDB so there will be minimal redo.

So here we go, let’s save the day. Using the Delphix virtualization admin GUI, pick the dSource and hit the provision button. Knock the slider over to allow us to provision from logsync and pick the time/date as Friday@10pm. Pick the correct server (where we have already disabled the VDB normally hosted here) and give the new VDB a new name. Go through all the memory settings reducing the numbers (20GB of memory_target is more than sufficient here) and importantly reconfigure the redolog sizes bringing the size down to 500MB each. Press the finish button and grab a coffee. Well, you may not even have time to grab a coffee. In less than 5 minutes we’ve just provisioned a production copy of a 10TB database!

Once Delphix had completed the provision process I informed the production DBA who then proceeded to perform the export of the dropped tables and import back into production. By midday the production database was fixed and verified, I’d dropped the temporary VDB and enabled the OAT VDB. All back to normal and Delphix was hailed a hero!

A Permanent Solution

To use Delphix as a permanent solution for recovery from logical corruption there’s a couple more things to think about and it’s all about availability. The situation we want to avoid is where there’s a problem with the supporting infrastructure just when we need it. We need to ensure the availability of the Delphix engine and the target server as well as the snapsyncs and logs. If any of these are unavailable we can’t provision the VDB.

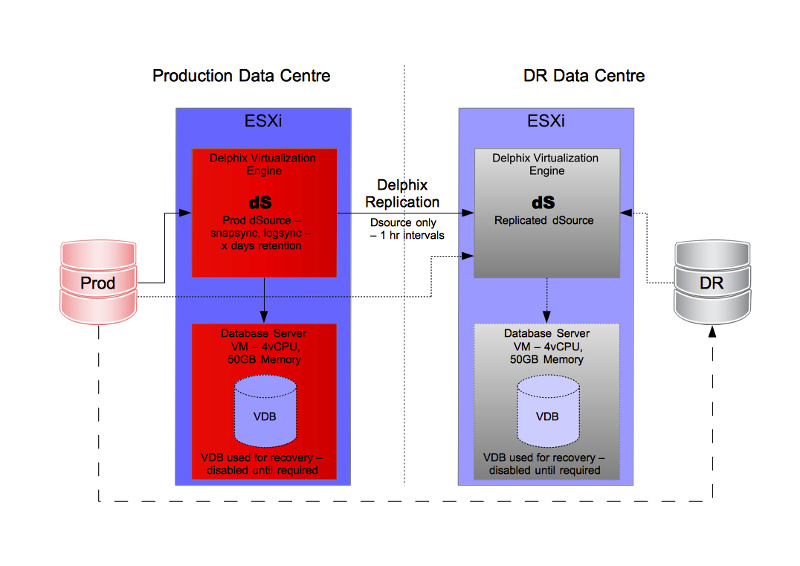

Here we have a simple diagram showing an example of how to implement a permanent solution. The key points are:

- create mirror image infrastructure in a secondary data centre to use as DR

- utilise existing hypervisors to host database servers

- use Delphix replication to copy the dSource from one data centre to the other

- create VDB to be used for recovery

- VDB to be in a permanent disabled state so as not to waste resources

- assumed that prod database has a DR copy using its own replication (SRDF, Data Guard, etc)

The design provides the ability to recover in the event of production side infrastructure failures or even total loss of the data centre. When invoking the DR side the option exists to repoint the dSource to the Prod database (if it is still available) or point it at the DR database (if we’ve completely lost the prod side).

If you had to build this from scratch just for this use case then it may become cost prohibitive but the likelihood is the infrastructure already exists. If it does then this is a simple and cost effective solution and arguably easier to invoke in a logical recovery situation.

I’ve heard anecdotally that there are many Delphix customers who have saved the day using Delphix in this way but I suspect it has not been based on an purposely implemented design like this. Without a design it can’t be relied upon or written into any SLA. For it to be recognised as a genuine usable solution then something like the above must be implemented otherwise it will only ever be a “best endeavors” kind of use case, which for some maybe perfectly adequate.

Matt is a technology consultant with over 20 years experience helping organisations around the world achieve data success using proven and emerging technologies. He is the Principal Consultant and Head Trainer at Kuzo Data.

Connect with Matt on LinkedIn.